AI consciousness

I recently came across a great post via Exponential View: a researcher at Anthropic reportedly estimates there’s up to a 15% chance that Claude (Anthropic’s large language model and an excellent competitor to ChatGPT) might already be conscious. The New York Times picked up the story, and consciousness researcher Anil Seth responded with what I found to be a compelling rebuttal.

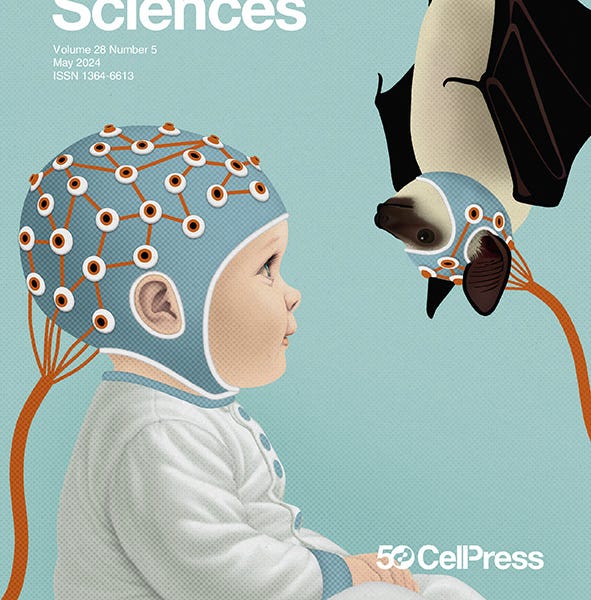

From my perspective, the idea that today’s LLMs like Claude could be conscious feels deeply premature. Why? Because what these models do at their core hasn’t changed: they are static functions. Once trained, an LLM’s parameters are fixed. At inference time there is no ongoing learning, no real-time adaptation, and no accumulation of experience.

Sure, LLMs appear conversational and responsive, even thoughtful at times—but this is an illusion created by clever engineering. Each time you prompt the model, it returns a response based on the text history you’ve provided. What looks like memory is just re-submitted context. The model itself has no persistent state, no continuity of experience. It doesn’t remember anything once the session ends. It doesn’t think, “That was a great conversation—I’ll keep that in mind next time.”

In that sense, it’s still just a function: you put in some input x, and get an output f(x). That’s impressive engineering, but not a path to consciousness—not yet, anyway.
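To make the "memory is just re-submitted context" point concrete, here is a minimal Python sketch. Everything in it is a stand-in I made up for illustration: `toy_model`, `FROZEN_PARAMS`, and `ChatSession` are hypothetical names, not any real LLM API, and the "model" only counts user turns. What it shows is the shape of the arrangement: the model is a pure function of the text it receives, and the conversation history lives entirely in the client wrapper.

```python
from typing import Dict, List

# Hypothetical "trained parameters": fixed once, never updated during a chat.
FROZEN_PARAMS: Dict[str, str] = {"prefix": "Turns visible in context:"}


def toy_model(context: str) -> str:
    """Stand-in for an LLM: a pure function f(x) of the submitted text."""
    # The reply depends only on the context passed in and the frozen parameters.
    user_turns = sum(
        1 for line in context.splitlines() if line.startswith("User: ")
    )
    return f"{FROZEN_PARAMS['prefix']} {user_turns}"


class ChatSession:
    """Client-side wrapper: the apparent memory is stored here, not in the model."""

    def __init__(self) -> None:
        self.history: List[str] = []

    def send(self, user_message: str) -> str:
        self.history.append(f"User: {user_message}")
        # Re-submit the entire transcript on every turn.
        reply = toy_model("\n".join(self.history))
        self.history.append(f"Assistant: {reply}")
        return reply


if __name__ == "__main__":
    session = ChatSession()
    print(session.send("hello"))   # the model sees one user turn
    print(session.send("again"))   # the model sees two, because we re-sent the first

    fresh = ChatSession()
    print(fresh.send("again"))     # new session: the earlier chat is simply gone
```

The second call only "remembers" the first because the wrapper sent the whole transcript again. Start a fresh `ChatSession` and the same message gets the first-turn answer, since nothing about the earlier conversation was ever stored in `toy_model` itself.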